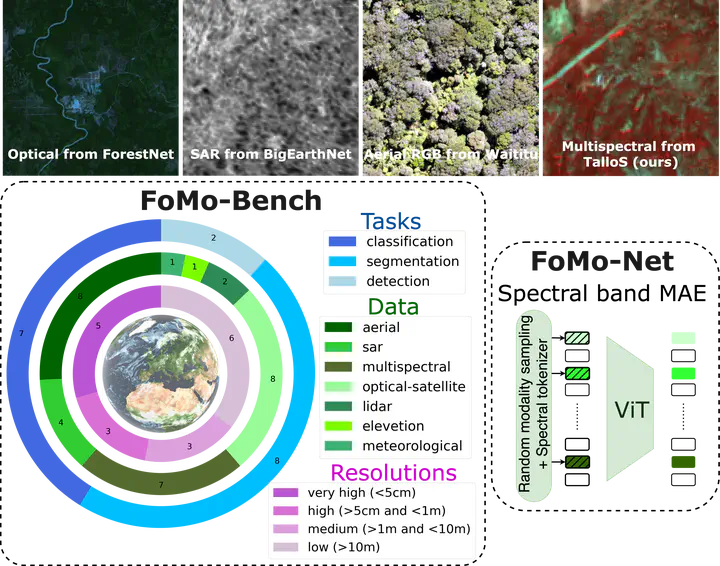

FoMo-Bench: a multi-modal, multi-scale and multi-task Forest Monitoring Benchmark for remote sensing foundation models

Abstract

Forests are an essential part of Earth’s ecosystems and natural systems, as well as providing services on which humanity depends, yet they are rapidly changing as a result of land use decisions and climate change. Understanding and mitigating negative effects requires parsing data on forests at global scale from a broad array of sensory modalities, and recently many such problems have been approached using machine learning algorithms for remote sensing. To date, forest-monitoring problems have largely been approached in isolation. Inspired by the rise of foundation models for computer vision and remote sensing, we here present the first unified Forest Monitoring Benchmark (FoMo-Bench). FoMo-Bench consists of 15 diverse datasets encompassing satellite, aerial, and inventory data, covering a variety of geographical regions, and including multispectral, red-green-blue, synthetic aperture radar (SAR) and LiDAR data with various temporal, spatial and spectral resolutions. FoMo-Bench includes multiple types of forest-monitoring tasks, spanning classification, segmentation, and object detection. To further enhance the diversity of tasks and geographies represented in FoMo-Bench, we introduce a novel global dataset, TalloS, combining satellite imagery with ground-based annotations for tree species classification, spanning 1,000+ hierarchical taxonomic levels (species, genus, family). Finally, we propose FoMo-Net, a foundation model baseline designed for forest monitoring with the flexibility to process any combination of commonly used sensors in remote sensing. This work aims to inspire research collaborations between machine learning and forest biology researchers in exploring scalable multi-modal and multi-task models for forest monitoring. All code and data will be made publicly available.

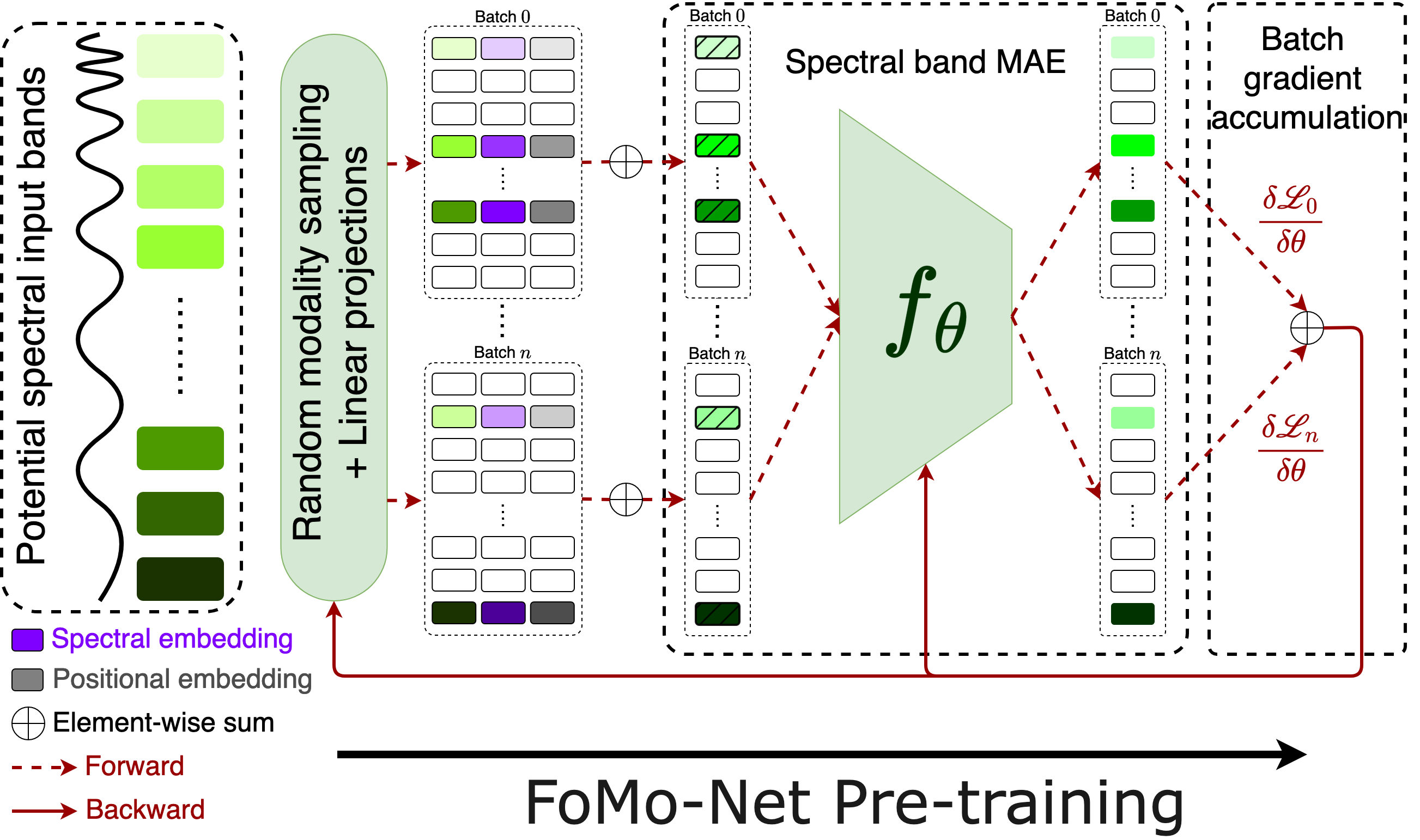

FoMo-Net pretraining framework

FoMo-Net pretraining framework